Photo by Erik van Dijk on Unsplash

Terraform ecosystem.

An article about complementary tools to improve your IaC SDLC.

DevOps seems to be more popular than ever. Infrastructure as Code is an integral part of DevOps, or at least that's what everyone has come to believe.

Actually, DevOps is not about the tooling.

Nevertheless, Terraform is the de facto standard for infrastructure provisioning across clouds, platforms and any other solution that has a provider. While writing a few hundred lines of Terraform is where the process usually ends, there's more to the ecosystem that can make the modules and components you write more robust, secure and scalable.

The most useful tools I've found over the past few years are:

- tfsec

- Trivy

- Terratest

- Terragrunt

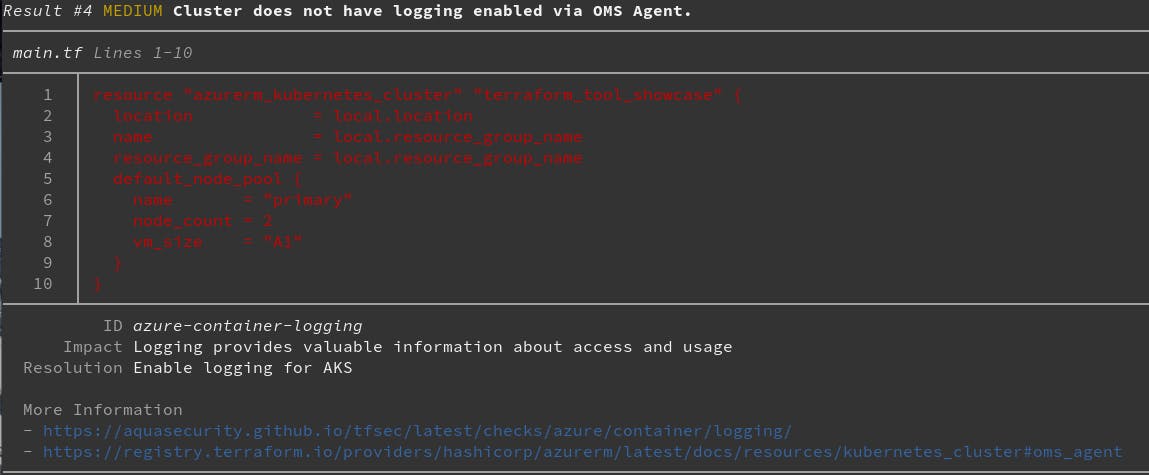

tfsec

This is a rather simple static code analysis tool. It checks your code against a set of known security best practices for a list of providers.

Installation instructions are available on tfsec's website.

After running tfsec . inside the Terraform directory, you will see an actionable list of vulnerabilities.

Add --format json for parsable JSON output if you intend to use this report in any other way, for example to fail your CI pipeline if there are any Critical or High vulnerabilities.

    {
      "results": [
        {
          "rule_id": "AVD-AZU-0043",
          "long_id": "azure-container-configured-network-policy",
          "rule_description": "Ensure AKS cluster has Network Policy configured",
          "rule_provider": "azure",
          "rule_service": "container",
          "impact": "No network policy is protecting the AKS cluster",
          "resolution": "Configure a network policy",
          "links": [
            "https://aquasecurity.github.io/tfsec/latest/checks/azure/container/configured-network-policy/",
            "https://registry.terraform.io/providers/hashicorp/azurerm/latest/docs/resources/kubernetes_cluster#network_policy"
          ],
          "description": "Kubernetes cluster does not have a network policy set.",
          "severity": "HIGH",
          "status": 0,
          "resource": "azurerm_kubernetes_cluster.terraform_tool_showcase",
          "location": {
            "filename": "/home/a/code/personal/tmp/terraform-poc/main.tf",
            "start_line": 1,
            "end_line": 10
          }
        },
        // edited
      ]
    }

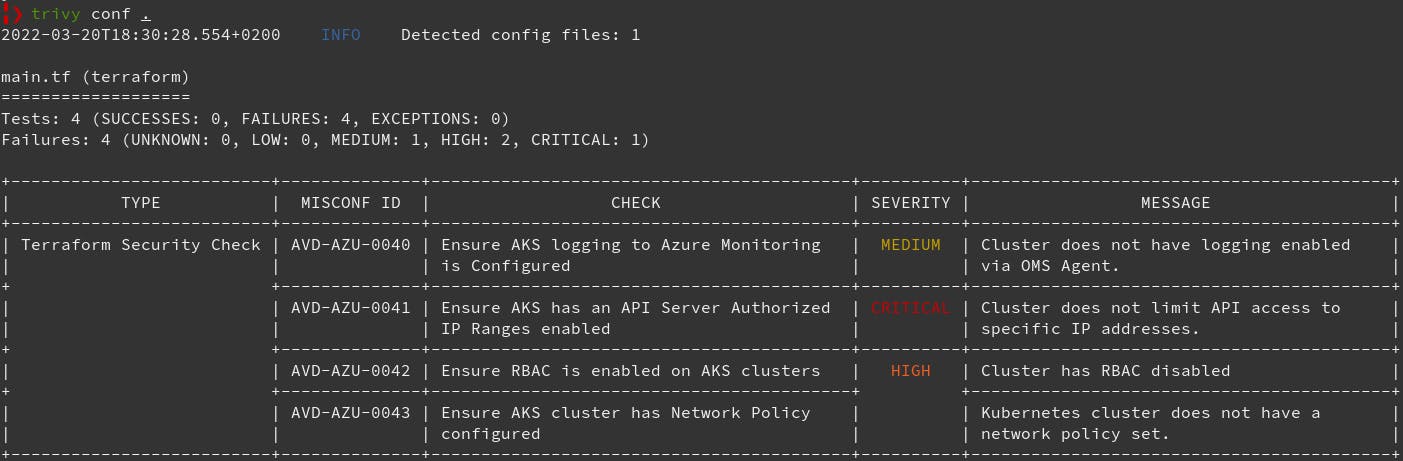

Trivy

If you want to reduce the number of tools you use in your organization, use Trivy instead. It's a more powerful tool that scans filesystems, Docker images and IaC configuration files. My personal favourite.

Installation instructions are available in the Trivy documentation.

The default output is more compact, giving you a better high-level overview of what's wrong with the IaC.

Trivy also provides an easier way of filtering the output with its --severity flag. --format is also there when it's necessary:

    trivy conf --severity CRITICAL .

Terratest

This is by far the best infrastructure testing tool on the market (personal opinion). It's written in Go, and that's always a plus, right? It's not Terraform-specific; check out the Terratest examples to see what else it can do. It also doesn't just check that an IaC framework is capable of deploying infrastructure (my thoughts on that are in my Infrastructure Testing in DevOps Teams Workflow article).

Instead, Terratest lets you verify that the configuration of your infrastructure is correct. You can (read: should) perform API calls against the infrastructure to check that, say, the firewall rules work, or test Kubernetes RBAC roles after deploying something into a new namespace. The list goes on (check out the examples I mentioned before).

And the best thing is that Terratest is polite: it will remove all of the infrastructure you told it to deploy if you ask it to.

Terratest Example Breakdown

    package test

    import (
        "testing"
        "time"

        http_helper "github.com/gruntwork-io/terratest/modules/http-helper"
        "github.com/gruntwork-io/terratest/modules/k8s"
    )

    func TestLocalIngress(t *testing.T) {
        t.Parallel()

        kubeResourcePath := "./test-app.yml"
        options := k8s.NewKubectlOptions("", "", "foo-bar-app")

        defer k8s.KubectlDelete(t, options, kubeResourcePath)

        k8s.KubectlApply(t, options, kubeResourcePath)

        k8s.WaitUntilIngressAvailable(t, options, "foo.artpav.here", 10, 10*time.Second)
        k8s.WaitUntilIngressAvailable(t, options, "bar.artpav.here", 10, 10*time.Second)
        k8s.GetIngress(t, options, "foo.artpav.here")

        http_helper.HttpGetWithRetry(t, "http://foo.artpav.here", nil, 200, "foo", 30, 10*time.Second)
        http_helper.HttpGetWithRetry(t, "http://bar.artpav.here", nil, 200, "bar", 30, 10*time.Second)
    }

The code above is an example Terratest test (duh) that I use to ensure my kind cluster and local domain are configured and operational. Let's break it down.

t.Parallel() - we allow Go's test runner to execute this test in parallel with other tests that are marked the same way.

k8s.NewKubectlOptions - here we specify what namespace we want to deploy to. The function accepts 3 arguments, as you see: the kube context, the kubeconfig path and the namespace. Leaving the first two empty falls back to the current context and the default kubeconfig.

defer k8s.KubectlDelete - we use the awesome, incredible defer keyword in Go and ask Terratest to clean everything up, even if the test fails somewhere in the middle.
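To see why defer gives you cleanup even on failure, here is a small stand-alone sketch that uses only the standard library. runTest and its step names are hypothetical stand-ins for the apply and delete calls, and a panic plays the role of a failing step; in a real Go test a failure reported through t.Fatal also runs the deferred calls before the test exits.

```go
package main

import "fmt"

// runTest (hypothetical) mimics the shape of the test: register the cleanup
// with defer first, then do work that may fail. It returns the steps in the
// order they actually ran.
func runTest(failMidway bool) (steps []string) {
	// Registered first, so it runs last: turns the simulated failure into a
	// recorded step instead of crashing the program.
	defer func() {
		if r := recover(); r != nil {
			steps = append(steps, fmt.Sprintf("recovered: %v", r))
		}
	}()

	// Stand-in for "defer k8s.KubectlDelete(...)": runs on every exit path.
	defer func() { steps = append(steps, "delete") }()

	steps = append(steps, "apply")
	if failMidway {
		panic("ingress never became available")
	}
	steps = append(steps, "assert")
	return steps
}
```

Calling runTest(false) yields apply, assert, delete, while runTest(true) yields apply, delete and then the recovered failure: the delete step runs in both cases.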

Then we apply everything using the options from before and wait until the Kubernetes ingress resources become available, retrying 10 times with 10 seconds between attempts.

    k8s.KubectlApply(t, options, kubeResourcePath)
    k8s.WaitUntilIngressAvailable(t, options, "foo.artpav.here", 10, 10*time.Second)
    k8s.WaitUntilIngressAvailable(t, options, "bar.artpav.here", 10, 10*time.Second)

Finally, we perform GET requests against the ingress addresses, expecting status code 200 with "foo" and "bar" as the response bodies (we are deploying 2 applications after all). Each request is retried up to 30 times, waiting 10 seconds between attempts, and stops as soon as it gets the expected response.

    http_helper.HttpGetWithRetry(t, "http://foo.artpav.here", nil, 200, "foo", 30, 10*time.Second)
    http_helper.HttpGetWithRetry(t, "http://bar.artpav.here", nil, 200, "bar", 30, 10*time.Second)

Bam! That was about 30 lines of Go code to deploy, test and delete a workload on a Kubernetes cluster. I know the article is about Terraform and yada yada, but you can find Terraform examples on Terratest's GitHub.

Terragrunt

TL;DR: You don't need Terragrunt 95% of the time. But when you do, it'll make your life so much easier.

The best thing you can do right now is to go to the Keep your Terraform code DRY guide, which shows you the use case for Terragrunt.

If you're still here, read on.

There are always 3 stages in creating Terraform code for any project:

- You write a lot of boilerplate code to deploy the infrastructure

- It becomes too big to manage in a single place, so you extract different parts of your infrastructure into modules and then glue them together in what I call components.

- You write a lot of boilerplate that consists of sourcing modules to deploy the infrastructure.

Terragrunt allows you to write that module part once, then reuse it as many times as you want.

Here's an example.

You have modules that create a network, a Kubernetes cluster, DNS zones, firewall rules and an application using Kustomize. Pretty common thing to have, right? You create a single component that gathers all those modules to create an environment. The file structure might look something like this:

    artpav-corp
    |- modules
    |  |- aks
    |  |  |- main.tf
    |  |  |- variables.tf
    |  |  |- outputs.tf
    |  |- ...
    |- components
    |  |- environment
    |  |  |- main.tf
    |  |  |- variables.tf
    |  |  |- outputs.tf

Now, how do you deploy different environments for the project?

- You can handle the environments in different stages of your CI/CD pipeline. If you're doing that already, it works for you, the auditors are happy and the risk of a supply chain attack is not relevant, stop reading: you're golden.

- You use Terragrunt.

You create an environments directory and add Terragrunt config files to it, providing the source of the environment component and the environment-specific inputs.

    terraform {
      # Use the environment component as the Terraform source for prod
      source = "../components/environment"
    }

    inputs = {
      kubernetes_cluster_node_count = 10
      kubernetes_cluster_node_size  = "m2.large"
      artpav_corp_app_pod_count     = 100
    }

This way, your environment configuration lives in version control, every change to the infrastructure goes through a PR, and your commit history doubles as a change log. The only variable you'll ever need in your CI/CD pipeline stage is "environment".